What is Liubo (六博)?

One of the oldest recorded board games, Liubo, 六博, literally "six sticks" in Chinese, has a mysterious origin and even more mysterious disappearance. Emerging early in Chinese history, it was the most played game during the Han Dynasty and remained so until the emergence of Go, 围棋, in ancient China. In spite of its abundant preservation in the archeological record, and numerous historical writings describing the game, the exact rules of the game remain unknown to this very day.

Liubo: Resurrection

Fortunately, we need not leave this game in perpetual obscurity. Jean-Louis Cazaux, an author of many books on the history of chess and ancient board games, has done an extensive study of the historical and archeological record and posited a reconstruction of the rules. Furthermore, he has subjected these rules to extensive playtesting to discover a game that is quite entertaining to play.

After some exchanges with the author, I've been able to implement the game he envisioned on the web, with both multiplayer and AI play.

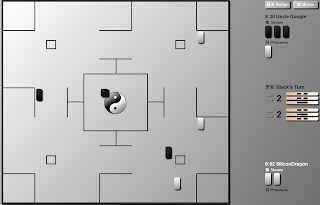

To play the game, first sign in using an OpenID provider such as Google or Yahoo. Then press "Play" to be placed in the queue for an opponent, or press "Skip to AI" to play the AI instead. You are randomly assigned white or black; white goes first, as in chess.

Two sets of yin-yang sticks are then thrown automatically on the right side of the screen, with one mark for yin and two marks for yang. For each set of three sticks, count the yang sticks and add one; the two totals are your move numbers, for instance 3-2. This means you move 3 on the first move, then 2 on the second. To move, you may either enter a stone onto the board at the move number or, if the piece has not moved this turn, advance a stone already on the board. Advancements go counter-clockwise around the board; press the Moves button to see the move order. At two special positions, numbers 6 and 11, you may move inward towards the center and across the board.

If you land directly on the center, your piece is promoted to an "owl" if you don't have one already; you may have only one owl at a time. An owl can capture other pieces and put them into your prison, whereas a capture by a regular stone only returns the piece to the other player.

There are four ways to win: capture all the opponent's stones; capture the opponent's owl when you have no owl; have five stones on the board and no owl while your opponent has an owl; or let the other side run out the 10-minute move clock. After each game, your Elo rating and that of your opponent (including the AI, "SiliconDragon") are adjusted. You can see the top-rated players with the Ratings button. You can find the game here:

HTML5 Multiplayer Design

When starting the design, I first did a static layout of the board in HTML and CSS. I wanted a design that would scale to different screen sizes so I could use it on iPad as well as various desktop sizes. Also I wanted it to work on various browsers including IE8, so I focused on using CSS drawing techniques with borders and screen percentages and tested it on various platforms. Using gradients and fallback CSS I was able to make the board, throwing sticks and pieces without using any images at all.

Once the layout was done, there remained the huge effort of programming. There are many options for creating an interactive HTML5 app today: WebSockets, Flash compilation, Canvas, and game-specific libraries such as Impact.js. For speed and compatibility, I chose div-based HTML5 and JavaScript with jQuery. Although node.js shows promise, I prefer the established ease of use of Google App Engine and its Python backend, so I went with that. Linking the client and server is the newly released Google Channel API.

Implementing a Google Channel API Client

The trickiest part of the whole project was getting the Channel API to work properly. My first mental reset was realizing that it is a unidirectional, not bidirectional, API. The server gives a channel token to a client, and the client then connects to the server; however, only the server is allowed to send messages over the channel. The client cannot send messages to the server that way. Instead, the client must use normal HTTP requests, which the server matches to a client token so that it can send its response over the channel.
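
To make the one-way flow concrete, here is a toy in-memory model written in plain Python. It is only a sketch of the pattern, not the Channel API itself: `ToyServer`, `ToyClient`, the `ACK` command code and the polling step are all hypothetical stand-ins (a real client receives pushed messages through its socket handler rather than polling).

```python
import json
import uuid
from collections import deque


class ToyServer:
    """Hypothetical stand-in for the server; it does not use the real Channel API."""

    def __init__(self):
        self.channels = {}  # token -> queue of messages waiting for that client

    def create_channel(self):
        # The server hands out a token; the client uses it to listen.
        token = uuid.uuid4().hex
        self.channels[token] = deque()
        return token

    def handle_http_request(self, token, body):
        # Client -> server traffic arrives as an ordinary request.
        if token not in self.channels:
            return 403
        # The server's answer travels over the channel, not in the HTTP reply.
        self.channels[token].append(json.dumps([["ACK", body]]))
        return 200


class ToyClient:
    def __init__(self, server):
        self.server = server
        self.token = server.create_channel()

    def send(self, body):
        # There is no "send" on the channel, so this is a plain request.
        return self.server.handle_http_request(self.token, body)

    def poll(self):
        # Drain whatever the server has pushed to this client's channel.
        queue = self.server.channels[self.token]
        return [json.loads(queue.popleft()) for _ in range(len(queue))]


server = ToyServer()
client = ToyClient(server)
status = client.send("join")
received = client.poll()
```

The request returns only a status code; the actual answer shows up later on the client's own channel.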

To illustrate this procedure, here is a Python snippet that runs when the client requests a new channel. It runs on App Engine, creates a new channel, stores its token in the datastore, and then returns the channel token along with other user data as JSON:

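    # Import paths are assumptions for the Python 2 App Engine runtime.
    from datetime import datetime
    from django.utils import simplejson
    from google.appengine.api import channel, users

    def put_channel(self, user, profile):
        # Create a channel keyed on the user's identity and remember its token.
        profile.channeltoken = channel.create_channel(
            make_channel_id(user.federated_identity()))
        profile.channeldate = datetime.now()
        profile.put()
        # Return the token to the client together with the profile data.
        profile_json = {
            'screenname': profile.screenname,
            'character': profile.character,
            'rating': profile.rating,
            'rank': compute_rank(profile.rating),
            'numgames': profile.numgames,
            'dateadded': profile.date_added.strftime('%Y-%m-%d'),
            'logoutURL': users.create_logout_url('/'),
            'channeltoken': profile.channeltoken
        }
        self.response.out.write(simplejson.dumps(profile_json))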

On the JavaScript side, we receive this channel token, set our channel id to it, and then create a new channel. We then open a socket and set up the socket handlers. The socket open handler requests a new game to join. The socket message handler processes each message sent from the server, such as starting a multiplayer game or receiving moves from an opponent.

    // Define the handlers first so they exist when they are attached below.
    self.socketOnOpened = function() {
        // give it a chance to startup
        setTimeout(game.requestJoin, 5000);
    };

    self.socketOnMessage = function(message) {
        // console.log('received message data:'+message.data);
        var messageArray = $.parseJSON(message.data);
        if (!messageArray) {
            alert('invalid message received: ' + message.data);
            return;
        }
        for (var i = 0; i < messageArray.length; i++) {
            var messageline = messageArray[i];
            var command = messageline.shift(); // first item is the command code
            var data = messageline;            // the rest is its data
            self.commandProcessor.processCommand(command, data);
        }
    };

    // Open the channel with the token from the server and attach the handlers.
    self.channel = new goog.appengine.Channel(self.channelid);
    self.socket = self.channel.open();
    self.socket.onopen = self.socketOnOpened;
    self.socket.onmessage = self.socketOnMessage;
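
The same decoding step can be sketched in Python, which is handy for checking message batches on the server before they are sent. This is a minimal sketch under the assumption that every message is a JSON list of lists whose first item is a string command code; `decode_batch` is a hypothetical helper, not part of the game's code.

```python
import json


def decode_batch(raw):
    """Split a JSON batch into (command, data) pairs, or return None if malformed."""
    try:
        batch = json.loads(raw)
    except ValueError:
        return None  # not valid JSON
    if not isinstance(batch, list):
        return None
    commands = []
    for line in batch:
        # Each line must be a non-empty list that starts with a string code.
        if not isinstance(line, list) or not line or not isinstance(line[0], str):
            return None
        commands.append((line[0], line[1:]))
    return commands


decoded = decode_batch('[["PW"], ["OP", "bob"], ["GS", "k1"], ["WM"]]')
```

Unlike the `shift()` call in the JavaScript handler, slicing leaves the incoming list untouched.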

Going back to the server-side Python code, we create a simple function for sending messages to the client via the channel. When creating a game, we first create a unique gamekey, then send it over the channel. Another important point about the Channel API is illustrated here: the server must have a separate, unique channel for each client it wishes to reach. There are no broadcast channels or multiple subscribers; it is strictly one-to-one. So the server has created two channel ids upon client connect, one for the white side and one for the black side. As the game progresses, the server sends messages to each client via that client's own channel. A unique gamekey, sent by each client with every request, allows the server to tie a request to a particular game, and thus provides the link between two clients in a multiplayer game:

    def send_message(self, channelid, payload):
        # Serialize once, then log and push the same string to the client.
        message = simplejson.dumps(payload)
        logging.info('sending message to:%s payload:%s', channelid, message)
        channel.send_message(channelid, message)

    def create_game(self):
        self.create_gamekey()
        game = Game(parent=gamezero)
        game.gamestatus = 'WJ'  # waiting for join
        game.gamecreated = datetime.now()
        game.gamekey = newline_encode(self.gamekey)
        game.gamestatus = 'GS'  # game started
        game.gamestarted = datetime.now()
        game.put()
        # Each player has a separate channel, so each gets its own batch.
        self.send_message(self.black_channelid, [
            ['PB'], ['OP', game.white_playername], ['GS', self.gamekey]])
        # game started, white's move
        self.send_message(self.white_channelid, [
            ['PW'], ['OP', game.black_playername], ['GS', self.gamekey], ['WM']])
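
Because channels are strictly one-to-one, the server needs some way to get from a gamekey to the opponent's channel. The sketch below shows one way to do that lookup with an in-memory dictionary. `GameRegistry` is a hypothetical illustration; the game itself keeps this state in the datastore, and the details of how it does so are not shown in this article.

```python
class GameRegistry:
    """Hypothetical in-memory map from gamekey to the two players' channel ids."""

    def __init__(self):
        self._games = {}

    def register(self, gamekey, white_channelid, black_channelid):
        self._games[gamekey] = {"white": white_channelid, "black": black_channelid}

    def other_channelid(self, gamekey, sender_channelid):
        # Return the opponent's channel id, or None for an unknown game or sender.
        game = self._games.get(gamekey)
        if game is None:
            return None
        if sender_channelid == game["white"]:
            return game["black"]
        if sender_channelid == game["black"]:
            return game["white"]
        return None


registry = GameRegistry()
registry.register("k1", "chan-white", "chan-black")
target = registry.other_channelid("k1", "chan-white")
```

Returning `None` for an unknown sender keeps a third party who guesses a gamekey from having moves relayed on their behalf.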

Returning to the client-side JavaScript, we receive the new gamekey and begin the game. Each time the player moves, the client sends an HTTP POST request to the server. This request contains the gamekey and the moves for this turn. We don't wait for any reply from the server to our request; instead, our previously established socket listener waits for commands to arrive via the Channel API, namely the other player's moves. This is how the Channel API is meant to be used in interactive applications.

    self.startGame = function(data) {
        $('#gamejoinstatus').html('Starting game...');
        clearTimeout(self.joinTimeout);
        game.gamekey = data[0]; // the gamekey is the only data item of 'GS'
        game.startGame();
    };

    self.sendMoves = function(moves) {
        var params = {
            gamekey: self.gamekey,
            moves: $.JSON.encode(moves)
        };
        // console.log('POST moves to server:'+params.moves);
        // Fire and forget: the opponent's reply arrives over the channel.
        $.post('/games', params)
            .fail(function() {
                $('#throwturn').html('Unable to send move');
            });
    };

Returning to the Python server side, we find our move processor. After receiving the HTTP POST request from the client with the gamekey and validating the moves, we simply relay the moves to the other player via the other player's channel id. In addition, we check for a validated game-over condition:

    def post(self):
        self.user = users.get_current_user()
        logging.info('game post gamekey:%s moves:%s',
                     self.request.get('gamekey'), self.request.get('moves'))
        self.gamekey = self.request.get('gamekey')
        if not self.user or not self.gamekey:
            self.error(403)
        elif self.request.get('moves'):
            self.moves = simplejson.loads(self.request.get('moves'))
            if self.decode_gamekey():
                self.process_moves()
            else:
                self.error(403)

    def process_moves(self):
        # just relay moves to the other side
        self.send_message(self.other_channelid(), self.moves)
        for move in self.moves:
            command = move[0]
            if command == 'GO':  # gameover
                status = move[1]
                winner = move[2]
                reason = move[3]
                self.process_gameover(status, winner, reason)
                break
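
The game-over path is presumably where the Elo adjustment mentioned earlier takes place. The body of `process_gameover` is not shown here, so the following is only the textbook Elo update for a decisive game, with an assumed K-factor of 32; the function names and the K value are illustrative, not taken from the game's code.

```python
def expected_score(rating, opponent_rating):
    """Standard Elo expected score for the player rated `rating`."""
    return 1.0 / (1.0 + 10 ** ((opponent_rating - rating) / 400.0))


def elo_update(winner_rating, loser_rating, k=32):
    """Return (new_winner_rating, new_loser_rating) after a decisive game."""
    # The winner gains k * (1 - expected score); the loser drops by the same amount.
    gain = k * (1.0 - expected_score(winner_rating, loser_rating))
    return winner_rating + gain, loser_rating - gain


even_match = elo_update(1500, 1500)
favourite_wins = elo_update(1800, 1400)
```

With equal ratings the winner gains half of K; a heavy favourite gains much less for the same result.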

Lastly, let's see how we actually process moves on the client side. I've adopted a simple, symmetrical JSON-based send/receive protocol, so all messages in both directions are sent as JSON-encoded lists. Each item in the list is itself a list, consisting of a two-character command code followed by zero or more data items. The heart of this is the client command processor, which receives each command type from the server and dispatches it to the appropriate handler:

    self.processCommand = function(command, data) {
        // console.log('Command and data received: '+command+' '+data);
        switch (command) {
            case 'WJ': self.waitForJoin(); break;
            case 'OP': self.setOtherPlayer(data); break;
            case 'PW': self.setPlayer('white'); break;
            case 'PB': self.setPlayer('black'); break;
            case 'GS': self.startGame(data); break;
            case 'GO': self.gameOver(data); break;
            case 'WT': self.receiveThrow('white', data); break;
            case 'BT': self.receiveThrow('black', data); break;
            case 'WM': self.takeTurn('white'); break;
            case 'BM': self.takeTurn('black'); break;
            case 'WS': self.moveStone('white', data); break;
            case 'BS': self.moveStone('black', data); break;
            case 'WP': self.promotionMove('white', data); break;
            case 'BP': self.promotionMove('black', data); break;
            case 'WB': self.receiveBonusThrow('white'); break;
            case 'BB': self.receiveBonusThrow('black'); break;
            case 'NR': self.newRating(data); break;
            default:
                // unknown commands are ignored
        }
    };
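
For comparison, the same dispatch can be written in Python with a dictionary in place of the switch statement. This is a sketch covering only a few of the command codes; the `CommandProcessor` class and its recording handlers are hypothetical and exist only to show the table-driven shape.

```python
class CommandProcessor:
    """Hypothetical table-driven dispatcher; handlers just record what happened."""

    def __init__(self):
        self.events = []
        # Map each two-character command code to a handler taking the data list.
        self.handlers = {
            "PW": lambda data: self.events.append(("player", "white")),
            "PB": lambda data: self.events.append(("player", "black")),
            "OP": lambda data: self.events.append(("opponent", data[0])),
            "GS": lambda data: self.events.append(("start", data[0])),
            "WM": lambda data: self.events.append(("turn", "white")),
            "BM": lambda data: self.events.append(("turn", "black")),
        }

    def process_command(self, command, data):
        # Unknown commands are ignored, mirroring the empty default case.
        handler = self.handlers.get(command)
        if handler is None:
            return False
        handler(data)
        return True


processor = CommandProcessor()
for line in [["PW"], ["OP", "bob"], ["GS", "k1"], ["WM"], ["??"]]:
    processor.process_command(line[0], line[1:])
```

Adding a command then means adding one dictionary entry, and the return value makes it easy to log codes the client does not recognise.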

That pretty much covers how to use the Google Channel API in a multiplayer game. The good thing is that it worked across all the platforms I tested, and at lower volumes it's completely free to use on Google App Engine. So you can get something up and running without spending any cash; you only need to front up some moolah if it takes off. There are many other aspects of the game that I could elaborate on if readers desire, but this should serve as a good introduction to using the Channel API in your own game. Try it out:

PS: I recommend the site HTML5Games to see the latest in HTML Indie Gaming.