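snap is a package format for faster release cycles on Ubuntu. Recently its `snapcraft.yaml` description file got a `license` field:

```yaml
# License for the snap content, based on SPDX license expressions.
license: <expression>
```
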
This led me to discover the corporate-looking SPDX website, which aims to set a standard for package metadata. And this metadata doesn't allow public domain code:

https://wiki.spdx.org/view/Legal_Team/Decisions/Dealing_with_Public_Domain_within_SPDX_Files

I expected that such a project would be equally inclusive and protect people's right to release their code free of copyright restrictions. I would expect it to work with corporate and public services to enable this option, clarify its meaning, and champion changes in legislation. Instead, this Linux Foundation project has decided that public domain code is too bothersome to deal with.

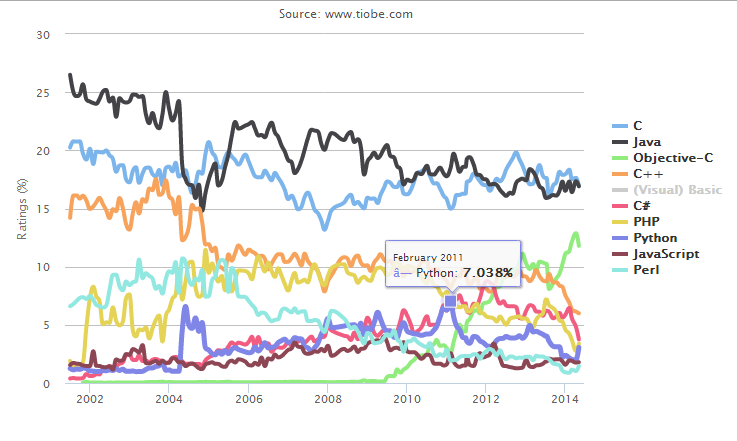

Most of my Python code is released into the public domain. I like the total freedom of not having to deal with strings attached, and I want to give this freedom to the people who use my code. I don't even want this code to be "mine". I do not need copyright at all. Giving credit to people and supporting those who need support doesn't require copyright; technology can automate it. Being listed in a copyright header doesn't mean that people care, and that is a problem copyright cannot fix.

Copyright was invented about 300 years ago, long before the internet, git and Mercurial, and lawyers are still (ab)using it in their legal warfare, turning it against those who could show how technology can help build a better world with less stress and more transparency. With the mentality of the next generation of internet children, it is possible to create a new kind of ecosystem with cooperative gameplay and better ecological goals. We really need to ask each other personally, "What do you want? What do you need?", and help each other grow internally, not externally. Let's collaborate on building better systems and better code instead of squabbling over what's already been written.

Starting with myself: what did I want? I wanted recognition. I didn't want money. Copyright didn't help me get recognition. Recognition came from my social activity: from interacting with people, building open source, collaborating, and trying to solve problems in a better way. Many years later, recognition came from inside, from mindfulness, and that was a huge relief: no more competing with people and showing off to look better on the outside. Copyright didn't help me get money either. Money came from solving problems for other people without adding new ones, as a result of working with others on things that I found challenging and interesting enough.

I've been paid, because I cannot survive without money, and my network was responsive to my needs. I didn't write CVs or cover letters, go to job fairs, or answer factory job advertisements. Once I became transparent to the network, with open hands and ready to help, money found its way to compensate me for my time. In seven years I signed only one legal paper, a contract with an NDA, and that was the only time I was not paid after finishing the job. I don't want to live in your legal network; I want to be left in mine. A network where we are transparent and don't attack each other with long legal "electronic documents" that are just scanned paper. I want to live in a network where my will is a signed data structure on a public blockchain, where people are connected, understand that being human is not easy, appreciate our nature, and support each other toward a greater goal.

I don't mind people playing the copyright game. People need games to play. But I do not approve of it: suing programmers over pet projects that became more popular than some mundane office project, or charging for access to scientific papers without paying the scientists who produced them. I am tired, I am scared because I am paranoid, and I want to opt out of this system.

I needed support, so I joined the Gratipay project. In Gratipay I didn't do much, but in the process I learned to support other people: people whose code I like, people I admire, people who were going through difficulties, or just people who know how to have fun. I started contributing to Gratipay because it was public domain and completely transparent down to the last penny. I could leave at any point and reuse what I learned from the code in my other projects. I tried to make it better and contribute back. I learned a lot, and the more questions I asked, the more interesting things I learned: about people, about economics, about financial gameplay. That was an awesome time and a great experience. I wish everybody could join a project like this at some point in their life, helping a completely open and transparent company. Gratipay could not survive in the U.S., and now its public domain code is serving Liberapay in France.

Public domain was the only way to opt out of copyright, and by deciding to abandon the fight for it, the Linux Foundation closes the door on my sharing code with no strings attached. Treating public domain works "the same way as any license" is the worst decision the SPDX project could make. Public domain sits in a conflict-of-interest zone for copyright lawyers, because it is about ignoring their domain: the right not to care about their business and not to be touched by their activities. Protecting public domain is a task for true legal hackers, the ones who go beyond compliance to see the people on the other end. There is always a person behind a Twitter handle or GitHub account, and that person means a little more than a "copyright holder".

SPDX raised an important point: public domain is not copyright. The right programmatic solution is to add a separate `copyright-optout: true` field that is mutually exclusive with `license`, because its semantics are completely different from the paper-based kludges and cargo cults of the past. This clears the space for the next generation of internet-connected people to ask questions: how do we give credit, and how do we support other people in the age of technology?
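To make the proposal concrete, here is a minimal sketch of how a packaging tool might enforce that rule. It assumes the metadata has already been parsed into a dict; the `copyright-optout` key is the field proposed here and does not exist in `snapcraft.yaml` or SPDX today, and the function name `copyright_status` is made up for illustration.

```python
from typing import Any, Mapping


def copyright_status(meta: Mapping[str, Any]) -> str:
    """Classify parsed package metadata by its copyright declaration.

    Hypothetical: `copyright-optout` is the proposed field, not an
    existing snapcraft.yaml or SPDX key.
    """
    license_expr = meta.get("license")
    opted_out = meta.get("copyright-optout", False)

    # The proposed field is a plain boolean flag, not a license expression.
    if not isinstance(opted_out, bool):
        raise TypeError("'copyright-optout' must be true or false")

    # Mutual exclusivity: if copyright is opted out, there is nothing to license.
    if opted_out and license_expr is not None:
        raise ValueError("'license' and 'copyright-optout' are mutually exclusive")

    if opted_out:
        return "public domain (copyright opt-out)"
    if license_expr is not None:
        return f"licensed under {license_expr}"
    return "unspecified"


if __name__ == "__main__":
    print(copyright_status({"name": "mytool", "license": "MIT"}))
    print(copyright_status({"name": "mytool", "copyright-optout": True}))
```

Keeping the opt-out as a separate boolean, rather than one more string in the license list, means a tool never has to guess whether something like `public-domain` is meant as a license: a package either declares a license expression or declares that copyright does not apply.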

Please fight for the public domain. It may turn out to be more important for the positive development of technology than we currently see, and it may provide a better foundation for bringing us together.