September 7, 2018

Python - Using Chrome Extensions With Selenium WebDriver

January 11, 2014

Python - Fixing My Photo Library Dates (Exif Metadata)

I have a large image library of photos I've taken or downloaded over the years. They are from various cameras and sources, many with missing or incomplete Exif metadata.

This is problematic because some image viewing programs and galleries use metadata to sort images into timelines. For example, when I view my library in Dropbox Photos timeline, images with missing Exif date tags are not displayed.

To remedy this, I wrote a Python script to fix the dates in my photo library. It uses gexiv2, which is a wrapper around the Exiv2 photo metadata library.

The script will:

- recursively scan a directory tree for jpg and png files

- get each file's creation time

- convert it to a timestamp string

- set Exif.Image.DateTime tag to timestamp

- set Exif.Photo.DateTimeDigitized tag to timestamp

- set Exif.Photo.DateTimeOriginal tag to timestamp

- save file with modified metadata

- set file access and modified times to file creation time

* Note: it modifies files in place.
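Below is a minimal Python 3 sketch of the file-handling steps in the list above. It makes a few assumptions. It treats `st_ctime` as "creation time", which holds on Windows. On Linux `st_ctime` is the inode change time, and macOS exposes `st_birthtime` instead. The actual Exif write is delegated to a hypothetical `write_tags(path, tags)` callback, because the gexiv2 calls depend on which binding version you have installed.

```python
import os
import time

# File extensions treated as images (matched case-insensitively).
IMAGE_EXTENSIONS = ('.jpg', '.jpeg', '.png')

# The three Exif date tags listed above.
DATE_TAGS = (
    'Exif.Image.DateTime',
    'Exif.Photo.DateTimeDigitized',
    'Exif.Photo.DateTimeOriginal',
)


def find_images(root):
    """Recursively yield paths of jpg/png files under root."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower().endswith(IMAGE_EXTENSIONS):
                yield os.path.join(dirpath, name)


def exif_timestamp(epoch_secs):
    """Format epoch seconds as an Exif date string: 'YYYY:MM:DD HH:MM:SS'."""
    return time.strftime('%Y:%m:%d %H:%M:%S', time.localtime(epoch_secs))


def fix_dates(root, write_tags):
    """Stamp every image under root with its st_ctime, in place.

    write_tags(path, tags) is a hypothetical callback that writes the
    {tag_name: value} dict into the file (for example, with gexiv2).
    """
    for path in find_images(root):
        created = os.stat(path).st_ctime  # read before the file is modified
        stamp = exif_timestamp(created)
        write_tags(path, {tag: stamp for tag in DATE_TAGS})
        # set access and modified times back to the "creation" time
        os.utime(path, (created, created))
```

Passing the tag writer in as a callback keeps the directory walk and timestamp logic testable without touching real images.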

The Code:

October 22, 2013

deadsnakes - Using Old Versions of Python on Ubuntu

How do you install an older version of Python on Ubuntu without building it yourself?

The Python packages in the official Ubuntu archives generally don't go back all that far, but people might still need to develop and test against these old Python interpreters. Felix Krull maintains a PPA (package archive) of older Python versions that are easy to install on Ubuntu.

see: https://launchpad.net/~fkrull/+archive/deadsnakes

Currently supported Python releases: 2.4, 2.5, 2.6, 2.7, 3.1, 3.2, 3.3

Instructions:

Add the deadsnakes repository:

$ sudo add-apt-repository ppa:fkrull/deadsnakes

Run Update:

$ sudo apt-get update

Install an older version of Python:

$ sudo apt-get install python2.6 python2.6-dev
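After installing, a few lines of Python can confirm which interpreters ended up on your PATH. This is a minimal sketch. It assumes the packages install binaries named after the version, like `python2.6`; the `VERSIONS` tuple below is an assumption based on the release list above. `shutil.which` needs Python 3.3 or later.

```python
import shutil

# Interpreter names assumed to match the deadsnakes release list above.
VERSIONS = ('python2.4', 'python2.5', 'python2.6', 'python2.7',
            'python3.1', 'python3.2', 'python3.3')


def find_interpreters(names=VERSIONS):
    """Map each interpreter name to its full path on PATH, or None if missing."""
    return {name: shutil.which(name) for name in names}


if __name__ == '__main__':
    for name, path in sorted(find_interpreters().items()):
        print('%-10s %s' % (name, path or 'not installed'))
```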

June 22, 2013

Generating Audio Spectrograms in Python

A spectrogram is a visual representation of the spectrum of frequencies in a sound sample.

more info: wikipedia spectrogram

Spectrogram code in Python, using Matplotlib:

(source on GitHub)

"""Generate a Spectrogram image for a given WAV audio sample.

A spectrogram, or sonogram, is a visual representation of the spectrum
of frequencies in a sound. Horizontal axis represents time, Vertical axis
represents frequency, and color represents amplitude.
"""
import wave

import numpy
import pylab


def graph_spectrogram(wav_file):
    sound_info, frame_rate = get_wav_info(wav_file)
    pylab.figure(num=None, figsize=(19, 12))
    pylab.subplot(111)
    pylab.title('spectrogram of %r' % wav_file)
    pylab.specgram(sound_info, Fs=frame_rate)
    pylab.savefig('spectrogram.png')


def get_wav_info(wav_file):
    # assumes a mono, 16-bit PCM WAV file (stereo samples would be interleaved)
    wav = wave.open(wav_file, 'r')
    frames = wav.readframes(-1)
    sound_info = numpy.frombuffer(frames, dtype=numpy.int16)
    frame_rate = wav.getframerate()
    wav.close()
    return sound_info, frame_rate


if __name__ == '__main__':
    wav_file = 'sample.wav'
    graph_spectrogram(wav_file)
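Under the hood, a spectrogram is a short-time Fourier transform. The signal is cut into overlapping, windowed frames, and each frame is run through an FFT. Here is a minimal numpy sketch of that idea; `stft_magnitude` is a hypothetical helper. Its defaults use 256-sample frames with 128 samples of overlap and a Hann window, which are intended to mirror `specgram`'s documented defaults (NFFT=256, noverlap=128). Note that `specgram` itself plots scaled power in dB rather than raw magnitudes.

```python
import numpy as np


def stft_magnitude(samples, nfft=256, hop=128):
    """Magnitude spectrogram: rows are frequency bins, columns are time frames.

    Each frame is nfft samples multiplied by a Hann window, then FFT'd.
    Consecutive frames start hop samples apart (nfft - hop samples overlap).
    """
    window = np.hanning(nfft)
    frames = [
        np.abs(np.fft.rfft(samples[start:start + nfft] * window))
        for start in range(0, len(samples) - nfft + 1, hop)
    ]
    return np.array(frames).T


if __name__ == '__main__':
    frame_rate = 8000
    t = np.arange(frame_rate) / frame_rate  # one second of sample times
    tone = np.sin(2 * np.pi * 1000 * t)     # a 1 kHz sine wave
    spec = stft_magnitude(tone)
    # bin spacing is frame_rate / nfft = 31.25 Hz, so 1 kHz lands in bin 32
    print(spec.shape, spec.argmax(axis=0)[0])
```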

Spectrogram code in Python, using timeside:

(source on GitHub)

"""Generate a Spectrogram image for a given audio sample.

Compatible with several audio formats: wav, flac, mp3, etc.
Requires: https://code.google.com/p/timeside/

A spectrogram, or sonogram, is a visual representation of the spectrum
of frequencies in a sound. Horizontal axis represents time, Vertical axis
represents frequency, and color represents amplitude.
"""
import timeside

audio_file = 'sample.wav'

decoder = timeside.decoder.FileDecoder(audio_file)
grapher = timeside.grapher.Spectrogram(width=1920, height=1080)
(decoder | grapher).run()
grapher.render('spectrogram.png')

happy audio hacking.

June 10, 2013

Python - concurrencytest: Running Concurrent Tests

Add parallel testing to your unit test framework.

In my previous post, I described running concurrent tests using nose as a loader and runner.

On a similar note, let's look at building concurrency into your own test framework built on Python's unittest.

Have a look at this module: concurrencytest

(Thanks to bits and concepts taken from testtools and bzrlib)

An Example:

Say you have a 'TestSuite' of tests loaded. You could run them with the standard 'TextTestRunner' like this:

runner = unittest.TextTestRunner()
runner.run(suite)

That would run the tests in your suite sequentially in a single process.

With the concurrencytest module, you can use a 'ConcurrentTestSuite' instead:

from concurrencytest import ConcurrentTestSuite, fork_for_tests

concurrent_suite = ConcurrentTestSuite(suite, fork_for_tests(4))
runner.run(concurrent_suite)

That would run the same tests split across 4 processes (workers).

Note: this relies on 'os.fork()' which only works on Unix systems.

There's no way to understand this better than looking at some contrived examples!

This first example is totally unrealistic, but shows off concurrency perfectly. The test cases it loads each sleep for 0.5 seconds and then exit.
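Here is a sketch of what such a test module could look like. It assumes the `concurrencytest` package is installed; the package is only imported when the file runs as a script. `SleepTest` and `make_suite` are hypothetical names, not taken from the original code.

```python
import time
import unittest


class SleepTest(unittest.TestCase):
    # hypothetical test case: does nothing but sleep for half a second
    def test_sleep(self):
        time.sleep(0.5)


def make_suite(count=50):
    # build a fresh suite for each run; a suite may drop its tests once run
    return unittest.TestSuite(SleepTest('test_sleep') for _ in range(count))


if __name__ == '__main__':
    # requires the concurrencytest package; os.fork() means Unix only
    from concurrencytest import ConcurrentTestSuite, fork_for_tests

    runner = unittest.TextTestRunner()
    print('Loaded %d test cases...' % make_suite().countTestCases())
    print('Run tests sequentially:')
    runner.run(make_suite())
    print('Run same tests across 50 processes:')
    runner.run(ConcurrentTestSuite(make_suite(), fork_for_tests(50)))
```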

The Code:

Output:

Loaded 50 test cases...
Run tests sequentially:
..................................................
----------------------------------------------------------------------
Ran 50 tests in 25.031s
OK
Run same tests across 50 processes:
..................................................
----------------------------------------------------------------------
Ran 50 tests in 0.525s
OK

nice!

Now another example that shows concurrency with CPU-bound test cases. The test cases it loads each calculate Fibonacci of 31 (recursively!) and then exit. We can see how it performs on my machine with 8 logical cores (quad-core Core i7, hyperthreaded).
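This sketch follows the same pattern as the previous one, with CPU-bound tests run across 2, 4, and 8 workers. It again assumes the `concurrencytest` package, and `FibTest`, `fib`, and `make_suite` are hypothetical names.

```python
import unittest


def fib(n):
    # deliberately naive recursion, so each test is CPU-bound
    return n if n < 2 else fib(n - 1) + fib(n - 2)


class FibTest(unittest.TestCase):
    # hypothetical test case: compute Fibonacci of 31, the slow way
    def test_fib(self):
        self.assertEqual(fib(31), 1346269)


def make_suite(count=50):
    return unittest.TestSuite(FibTest('test_fib') for _ in range(count))


if __name__ == '__main__':
    # requires the concurrencytest package; os.fork() means Unix only
    from concurrencytest import ConcurrentTestSuite, fork_for_tests

    runner = unittest.TextTestRunner()
    print('Loaded %d test cases...' % make_suite().countTestCases())
    print('Run tests sequentially:')
    runner.run(make_suite())
    for workers in (2, 4, 8):
        print('Run same tests with %d processes:' % workers)
        runner.run(ConcurrentTestSuite(make_suite(), fork_for_tests(workers)))
```

Past the number of physical cores, extra workers help less, which is consistent with the small gain from 4 to 8 processes in the output below.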

The Code:

Output:

Loaded 50 test cases...
Run tests sequentially:
..................................................
----------------------------------------------------------------------
Ran 50 tests in 21.941s
OK
Run same tests with 2 processes:
..................................................
----------------------------------------------------------------------
Ran 50 tests in 11.081s
OK
Run same tests with 4 processes:
..................................................
----------------------------------------------------------------------
Ran 50 tests in 5.862s
OK
Run same tests with 8 processes:
..................................................
----------------------------------------------------------------------
Ran 50 tests in 4.743s
OK

happy hacking.

June 9, 2013

Python - Nose: Running Concurrent Tests

TLDR:

To enable multiprocessing with N workers, run nose with:

$ nosetests --processes=N

When writing tests in Python, I start with TestCases derived from unittest.TestCase, and standard test discovery. When I need more complex test discovery/loading or output reports, I often use nose and its assortment of plugins as my test loader/runner.

One nice feature of nose is the multiprocess plugin. It allows you to run your test suites concurrently rather than sequentially, spread across a number of worker processes. Running tests in parallel like this can potentially give you a large speedup in your test run times.

from the nose multiprocess docs:

"You can parallelize a test run across a configurable number of worker processes. While this can speed up CPU-bound test runs, it is mainly useful for IO-bound tests that spend most of their time waiting for data to arrive from someplace else and can benefit from parallelization."

Normally, you run tests from nose with:

$ nosetests

To run the same tests split across 4 processes (workers), you would just do:

$ nosetests --processes=4

Assuming your tests are properly isolated, everything should run normally, and you can benefit from a speedup on a multiprocessor machine.
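One way to keep tests properly isolated is to give each test its own resources, instead of sharing fixed file paths or global state. In this minimal sketch, every test writes into a private temporary directory, so parallel workers started with `--processes=N` cannot clobber each other's files. The `TestReportFile` case is hypothetical.

```python
import os
import shutil
import tempfile
import unittest


class TestReportFile(unittest.TestCase):
    # Hypothetical test case. Each test gets its own scratch directory, so
    # tests running in parallel worker processes never touch the same file.
    # A hard-coded path like '/tmp/report.txt' would let two workers race.

    def setUp(self):
        self.workdir = tempfile.mkdtemp()

    def tearDown(self):
        shutil.rmtree(self.workdir)

    def test_write_then_read(self):
        path = os.path.join(self.workdir, 'report.txt')
        with open(path, 'w') as f:
            f.write('ok')
        with open(path) as f:
            self.assertEqual(f.read(), 'ok')


if __name__ == '__main__':
    unittest.main()
```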

However, beware:

"Not all test suites will benefit from, or even operate correctly using, this plugin. For example, CPU-bound tests will run more slowly if you don't have multiple processors."

"But the biggest issue you will face is probably concurrency. Unless you have kept your tests as religiously pure unit tests, with no side-effects, no ordering issues, and no external dependencies, chances are you will experience odd, intermittent and unexplainable failures and errors when using this plugin. This doesn't necessarily mean the plugin is broken; it may mean that your test suite is not safe for concurrency."

March 28, 2013

Python - Re-tag FLAC Audio Files (Update Metadata)

I had a bunch of FLAC (.flac) audio files together in a directory. They are from various sources and their metadata (tags) were somewhat incomplete or incorrect.

I managed to manually get all of the files standardized in "%Artist% - %Title%.flac" file name format. However, what I really wanted was to clear their metadata and just save "Artist" and "Title" tags, pulled from file names.

I looked at a few audio tagging tools in the Ubuntu repos, and came up short finding something simple that covered my needs. (I use Audio Tag Tool for MP3s, but it has no FLAC file support.)

So, I figured the easiest way to get this done was a quick Python script.

I grabbed Mutagen, a Python module to handle audio metadata with FLAC support.

This is essentially the task I was looking to do:

#!/usr/bin/env python
import glob
import os

from mutagen.flac import FLAC

for filename in glob.glob('*.flac'):
    # "Artist - Title.flac" -> ('Artist', 'Title')
    artist, title = os.path.splitext(filename)[0].split(' - ', 1)
    audio = FLAC(filename)
    audio.clear()
    audio['artist'] = artist
    audio['title'] = title
    audio.save()

It iterates over .flac files in the current directory, clearing the metadata and rewriting only the artist/title tags based on each file name.

I created a repository with a slightly more full-featured version, used to re-tag single FLAC files:

https://github.com/cgoldberg/audioscripts/blob/master/flac_retag.py

January 28, 2013

Python - verify a PNG file and get image dimensions

A useful snippet for getting .png image dimensions without using an external imaging library.

#!/usr/bin/env python
import struct


def get_image_info(data):
    """Return (width, height) for PNG image data (bytes)."""
    if not is_png(data):
        raise ValueError('not a png image')
    # width and height are big-endian 4-byte unsigned ints in the IHDR chunk
    width, height = struct.unpack('>LL', data[16:24])
    return width, height


def is_png(data):
    # 8-byte PNG signature, then the IHDR chunk type at bytes 12-16
    return data[:8] == b'\211PNG\r\n\032\n' and data[12:16] == b'IHDR'


if __name__ == '__main__':
    with open('foo.png', 'rb') as f:
        data = f.read()
    print(is_png(data))
    print(get_image_info(data))

/headnods:

getimageinfo.py source, Portable_Network_Graphics (Wikipedia)
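The offsets used above follow the PNG layout. A file starts with an 8-byte signature, then the 4-byte length of the first chunk, then the 4-byte chunk type `IHDR`, and then width and height as big-endian 32-bit integers. This small Python 3 sketch builds just those first 24 bytes in memory, which is handy for testing the parser without a real image file. `fake_png_header` and `png_size` are hypothetical helpers.

```python
import struct

# The 8-byte PNG signature (the same bytes as '\211PNG\r\n\032\n' above).
PNG_SIGNATURE = b'\x89PNG\r\n\x1a\n'


def fake_png_header(width, height):
    """Build only the first 24 bytes of a PNG: not a complete, valid image."""
    ihdr_length = struct.pack('>L', 13)  # IHDR data is always 13 bytes long
    return PNG_SIGNATURE + ihdr_length + b'IHDR' + struct.pack('>LL', width, height)


def png_size(data):
    """Return (width, height) from PNG bytes, checking signature and IHDR."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b'IHDR':
        raise ValueError('not a png image')
    return struct.unpack('>LL', data[16:24])


if __name__ == '__main__':
    print(png_size(fake_png_header(640, 480)))  # (640, 480)
```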

January 23, 2013

Python Unit Testing Tutorial (PyMOTW unittest update)

tl;dr: an update to PyMOTW for `unittest` in Python 3: Python Unit Testing Tutorial.

When I was learning programming in Python, Doug Hellmann's 'PyMOTW' (Python Module Of The Week) blog-series was one of the best resources to learn Python's standard library.

His series later culminated in the book: 'The Python Standard Library By Example'.

The PyMOTW entry on `unittest` was a great introduction to unit testing in Python. Since the PyMOTW version is getting quite outdated, I updated the `unittest` module entry.

This new version includes some edits and updates to the text, and all code and examples have been updated to reflect Python 3.3.

Have a look at my updated Python 3.3 version:

'Python Unit Testing Tutorial'

license: Creative Commons Attribution, Non-commercial, Share-alike 3.0

say no to bugs...

January 14, 2013

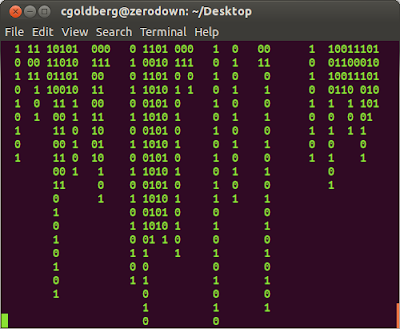

Python - "The Matrix" in your terminal

A Linux console program in Python that scrolls binary numbers vertically in your terminal. Inspired by the movie The Matrix.
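As a rough illustration of the idea (not the gist's code), here is a Python 3 sketch. It prints rows of random 0/1 digits sized to the terminal width, so the output scrolls up the screen. `matrix_line`, `run`, and the `density`/`delay` values are assumptions made for this sketch.

```python
import random
import shutil
import sys
import time


def matrix_line(width, density=0.5, rng=random):
    """Return one row of random 0/1 digits, with blanks mixed in."""
    return ''.join(
        rng.choice('01') if rng.random() < density else ' '
        for _ in range(width)
    )


def run(delay=0.05):
    """Print rows forever; each new row scrolls the screen up. Ctrl+C stops."""
    width = shutil.get_terminal_size().columns
    try:
        while True:
            sys.stdout.write(matrix_line(width) + '\n')
            sys.stdout.flush()
            time.sleep(delay)
    except KeyboardInterrupt:
        pass


if __name__ == '__main__':
    run()
```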

a screenshot:

in action:

the Code:

https://gist.github.com/4530348

January 6, 2013

Python Testing - PhantomJS with Selenium WebDriver

PhantomJS is a headless WebKit browser with a JavaScript API. It can be used for headless website testing.

PhantomJS has a lot of different uses. The interesting bit for me is to use PhantomJS as a lighter-weight replacement for a browser when running web acceptance tests. This enables faster testing, without a display or the overhead of full-browser startup/shutdown.

I write my web automation using Selenium WebDriver, in Python.

In future versions of PhantomJS, the GhostDriver component will be included.

GhostDriver is a pure JavaScript implementation of the WebDriver Wire Protocol for PhantomJS. It's a Remote WebDriver that uses PhantomJS as back-end.

So, GhostDriver is the bridge we need to use Selenium WebDriver with PhantomJS.

Since it is not available in the current PhantomJS release, you can try it yourself by compiling a special version of PhantomJS:

It was pretty trivial to set up on Ubuntu (12.04):

$ sudo apt-get install build-essential chrpath git-core libssl-dev libfontconfig1-dev
$ git clone git://github.com/ariya/phantomjs.git
$ cd phantomjs
$ git checkout 1.8
$ ./build.sh
$ git remote add detro https://github.com/detro/phantomjs.git
$ git fetch detro && git checkout -b detro-ghostdriver-dev remotes/detro/ghostdriver-dev
$ ./build.sh

Then grab the `phantomjs` binary it produced (look inside `phantomjs/bin`). This is a self-contained executable; it can be moved to a different directory or another machine. Make sure it is located somewhere on your PATH, or declare its location when creating your PhantomJS driver, as in the examples below.

For these examples, the `phantomjs` binary is located in the same directory as the test script.

Example: Python Using PhantomJS and Selenium WebDriver.

#!/usr/bin/env python
from selenium import webdriver

driver = webdriver.PhantomJS('./phantomjs')
# do webdriver stuff here
driver.quit()

Example: Python Unit Test Using PhantomJS and Selenium WebDriver.

#!/usr/bin/env python
import unittest

from selenium import webdriver


class TestUbuntuHomepage(unittest.TestCase):

    def setUp(self):
        self.driver = webdriver.PhantomJS('./phantomjs')

    def testTitle(self):
        self.driver.get('http://www.ubuntu.com/')
        self.assertIn('Ubuntu', self.driver.title)

    def tearDown(self):
        self.driver.quit()


if __name__ == '__main__':
    unittest.main(verbosity=2)

resources:

June 17, 2012

Python Timer Class - Context Manager for Timing Code Blocks

Here is a handy Python Timer class. It creates a context manager object, used for timing a block of code.

from timeit import default_timer


class Timer(object):

    def __init__(self, verbose=False):
        self.verbose = verbose
        self.timer = default_timer

    def __enter__(self):
        self.start = self.timer()
        return self

    def __exit__(self, *args):
        end = self.timer()
        self.elapsed_secs = end - self.start
        self.elapsed = self.elapsed_secs * 1000  # millisecs
        if self.verbose:
            print('elapsed time: %f ms' % self.elapsed)

To use the Timer (context manager object), invoke it using Python's `with` statement. The duration of the context (code inside your `with` block) will be timed. It uses the appropriate timer for your platform, via the `timeit` module.

Timer is used like this:

with Timer() as target:
    pass  # block of code goes here

# result (elapsed time) is stored in `target` attributes:
# target.elapsed (millisecs) and target.elapsed_secs (secs)

Example script:

timing a web request (HTTP GET), using the `requests` module.

#!/usr/bin/env python
import requests

from timer import Timer

url = 'https://github.com/timeline.json'

with Timer() as t:
    r = requests.get(url)

print('fetched %r in %.2f millisecs' % (url, t.elapsed))

Output:

fetched 'https://github.com/timeline.json' in 458.76 millisecs
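On Python 3.3+, the same idea can also be written as a generator with `contextlib.contextmanager` and `time.perf_counter`, which is a monotonic, high-resolution clock. In this minimal sketch, `timed` is a hypothetical name. It yields a dict that is filled in on exit, in place of the class's `elapsed` attribute.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed():
    """Yield a dict that holds 'elapsed_ms' once the with-block exits."""
    result = {}
    start = time.perf_counter()
    try:
        yield result
    finally:
        # runs even if the block raises, so the timing is always recorded
        result['elapsed_ms'] = (time.perf_counter() - start) * 1000


if __name__ == '__main__':
    with timed() as t:
        time.sleep(0.1)
    print('slept for %.2f millisecs' % t['elapsed_ms'])
```

The class version is easier to extend with extra state. The generator version is shorter and handles cleanup with a plain `try/finally`.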

`timer.py` in GitHub Gist form, with more examples:

June 4, 2012

History of Python - Development Visualization - Gource

I made a new visualization. Have a look!

History of Python - Gource - development visualization (August 1990 - June 2012)

[HD video, encoded at 1080p. watch on YouTube in highest resolution possible.]

What is it?

This is a visualization of Python core development. It shows growth of the Python project's source code over time (August 1990 - June 2012). Nearly 22 years! The source code history and relations are displayed by Gource as an animated tree, tracking commits over time. Directories appear as branches with files as leaves. Developers can be seen working on the tree at the times they contributed to the Python project.

Video:

Rendered with Gource v0.37 on Ubuntu 12.04

Music:

Chris Zabriskie - The Life and Death of a Certain K Zabriskie Patriarch

Repository:

CPython 3.3.0 alpha, retrieved from Mercurial on June 2, 2012

for more visualizations and other videos, check out my YouTube channel.

April 23, 2012

SST 0.2.1 Release Announcement (selenium-simple-test)

SST version 0.2.1 has been released.

SST (selenium-simple-test) is a web test framework that uses Python to generate functional browser-based tests.

SST version 0.2.1 is on PyPI: http://pypi.python.org/pypi/sst

install or upgrade with:

pip install -U sst

Changelog: http://testutils.org/sst/changelog.html

SST Docs: http://testutils.org/sst

SST on Launchpad: https://launchpad.net/selenium-simple-test

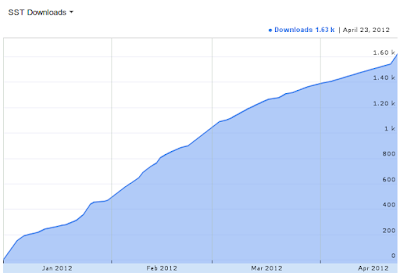

SST downloads | (Jan 1 2012 - April 23 2012)

1600+ downloads from PyPI since initial release.

April 9, 2012

Python - Getting Data Into Graphite - Code Examples

This post shows code examples in Python (2.7) for sending data to Graphite.

Once you have a Graphite server setup, with Carbon running/collecting, you need to send it data for graphing.

Basically, you write a program to collect numeric values and send them to Graphite's backend aggregator (Carbon).

To send data, you create a socket connection to the graphite/carbon server and send a message (string) in the format:

"metric_path value timestamp\n"

- `metric_path`: arbitrary namespace containing substrings delimited by dots. The most general name is at the left and the most specific is at the right.

- `value`: numeric value to store.

- `timestamp`: epoch time.

- messages must end with a trailing newline.

- multiple messages may be batched and sent in a single socket operation. Each message is delimited by a newline, with a trailing newline at the end of the message batch.
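Building a batch by hand is easy to get subtly wrong, for example by leaving off the trailing newline. Here is a minimal sketch of a helper that formats `(metric_path, value)` pairs into the message format above. `format_metrics` is a hypothetical name. On Python 3, the result still needs `.encode()` before it is passed to `sock.sendall()`.

```python
import time


def format_metrics(metrics, timestamp=None):
    """Build a batch of Carbon plaintext messages.

    metrics is an iterable of (metric_path, value) pairs. Every line is
    'metric_path value timestamp', and the batch ends with a trailing newline.
    """
    if timestamp is None:
        timestamp = int(time.time())
    lines = ['%s %s %d' % (path, value, timestamp) for path, value in metrics]
    return ('\n'.join(lines) + '\n') if lines else ''


if __name__ == '__main__':
    print(repr(format_metrics([('foo.bar.baz', 42)], timestamp=74857843)))
    # 'foo.bar.baz 42 74857843\n'
```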

Example message:

"foo.bar.baz 42 74857843\n"

Let's look at some (Python 2.7) code for sending data to graphite...

Here is a simple client that sends a single message to graphite.

Code:

#!/usr/bin/env python
import socket
import time

CARBON_SERVER = '0.0.0.0'
CARBON_PORT = 2003

message = 'foo.bar.baz 42 %d\n' % int(time.time())

print('sending message:\n%s' % message)
sock = socket.socket()
sock.connect((CARBON_SERVER, CARBON_PORT))
sock.sendall(message.encode('utf-8'))  # sockets send bytes
sock.close()

Here is a command line client that sends a single message to graphite:

Usage:

$ python client-cli.py metric_path value

Code:

#!/usr/bin/env python
import argparse
import socket
import time

CARBON_SERVER = '0.0.0.0'
CARBON_PORT = 2003

parser = argparse.ArgumentParser()
parser.add_argument('metric_path')
parser.add_argument('value')
args = parser.parse_args()

if __name__ == '__main__':
    timestamp = int(time.time())
    message = '%s %s %d\n' % (args.metric_path, args.value, timestamp)
    print('sending message:\n%s' % message)
    sock = socket.socket()
    sock.connect((CARBON_SERVER, CARBON_PORT))
    sock.sendall(message.encode('utf-8'))  # sockets send bytes
    sock.close()

Here is a client that collects load average (Linux-only) and sends a batch of 3 messages (1min/5min/15min loadavg) to graphite. It will run continuously in a loop until killed. (adjust the delay for faster/slower collection interval):

#!/usr/bin/env python
import platform
import socket
import time

CARBON_SERVER = '0.0.0.0'
CARBON_PORT = 2003
DELAY = 15  # secs


def get_loadavgs():
    with open('/proc/loadavg') as f:
        return f.read().strip().split()[:3]


def send_msg(message):
    print('sending message:\n%s' % message)
    sock = socket.socket()
    sock.connect((CARBON_SERVER, CARBON_PORT))
    sock.sendall(message.encode('utf-8'))  # sockets send bytes
    sock.close()


if __name__ == '__main__':
    # dots in the hostname would add extra levels to the metric path
    node = platform.node().replace('.', '-')
    while True:
        timestamp = int(time.time())
        loadavgs = get_loadavgs()
        lines = [
            'system.%s.loadavg_1min %s %d' % (node, loadavgs[0], timestamp),
            'system.%s.loadavg_5min %s %d' % (node, loadavgs[1], timestamp),
            'system.%s.loadavg_15min %s %d' % (node, loadavgs[2], timestamp)
        ]
        message = '\n'.join(lines) + '\n'
        send_msg(message)
        time.sleep(DELAY)

Resources:

April 7, 2012

Installing Graphite 0.9.9 on Ubuntu 12.04 LTS

I just set up a Graphite server on Ubuntu 12.04 (Precise).

Here are some instructions for getting it all working (using Apache as web server).

It follows these steps:

- install system dependencies (apache, django, dev libs, etc)

- install Whisper (db lib)

- install and configure Carbon (data aggregator)

- install Graphite (django webapp)

- configure Apache (http server)

- create initial database

- start Carbon (data aggregator)

Once that is done, you should be able to visit the host in your web browser and see the Graphite UI.

Setup Instructions:

#############################

# INSTALL SYSTEM DEPENDENCIES

#############################

$ sudo apt-get install apache2 libapache2 libapache2-mod-wsgi \
    libapache2-mod-python memcached python-dev python-cairo-dev \
    python-django python-ldap python-memcache python-pysqlite2 \
    python-pip sqlite3 erlang-os-mon erlang-snmp rabbitmq-server

$ sudo pip install django-tagging

#################

# INSTALL WHISPER

#################

$ sudo pip install http://launchpad.net/graphite/0.9/0.9.9/+download/whisper-0.9.9.tar.gz

################################################

# INSTALL AND CONFIGURE CARBON (data aggregator)

################################################

$ sudo pip install http://launchpad.net/graphite/0.9/0.9.9/+download/carbon-0.9.9.tar.gz

$ cd /opt/graphite/conf/

$ sudo cp carbon.conf.example carbon.conf

$ sudo cp storage-schemas.conf.example storage-schemas.conf

###########################

# INSTALL GRAPHITE (webapp)

###########################

$ sudo pip install http://launchpad.net/graphite/0.9/0.9.9/+download/graphite-web-0.9.9.tar.gz

or

$ wget http://launchpad.net/graphite/0.9/0.9.9/+download/graphite-web-0.9.9.tar.gz

$ tar -zxvf graphite-web-0.9.9.tar.gz

$ mv graphite-web-0.9.9 graphite

$ cd graphite

$ sudo python check-dependencies.py

$ sudo python setup.py install

##################

# CONFIGURE APACHE

##################

$ cd graphite/examples

$ sudo cp example-graphite-vhost.conf /etc/apache2/sites-available/default

$ sudo cp /opt/graphite/conf/graphite.wsgi.example /opt/graphite/conf/graphite.wsgi

$ sudo mkdir /etc/httpd

$ sudo mkdir /etc/httpd/wsgi

$ sudo /etc/init.d/apache2 reload

#########################

# CREATE INITIAL DATABASE

#########################

$ cd /opt/graphite/webapp/graphite/

$ sudo python manage.py syncdb

$ sudo chown -R www-data:www-data /opt/graphite/storage/

$ sudo /etc/init.d/apache2 restart

$ sudo cp local_settings.py.example local_settings.py

################################

# START CARBON (data aggregator)

################################

$ cd /opt/graphite/

$ sudo ./bin/carbon-cache.py start
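Before sending data, it can help to confirm that carbon-cache is accepting connections. This small sketch assumes Carbon's plaintext listener is on the default port 2003 used in the client examples. `carbon_is_listening` is a hypothetical helper, and it only checks that a TCP connection succeeds.

```python
import socket


def carbon_is_listening(host='127.0.0.1', port=2003, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        conn = socket.create_connection((host, port), timeout=timeout)
    except OSError:  # refused, unreachable, or timed out
        return False
    conn.close()
    return True


if __name__ == '__main__':
    print('carbon listening:', carbon_is_listening())
```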

Resources:

- http://graphite.readthedocs.org/en/latest/install.html

- http://graphite.wikidot.com/installation

- http://geek.michaelgrace.org/2011/09/how-to-install-graphite-on-ubuntu/

* works on my machine, Ubuntu 12.04

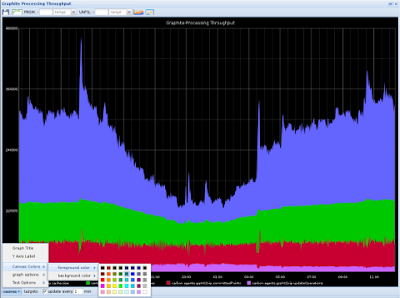

Python - Graphite: Storage and Visualization of Time-series Data

I'm doing some work with Graphite in Python. Here is a quick overview of what Graphite is...

Graphite provides real-time visualization and storage of numeric time-series data.

Links:

- Project: https://launchpad.net/graphite

- Docs: http://graphite.readthedocs.org

Graphite does two things:

- Store numeric time-series data

- Render graphs of this data on demand

Graphite consists of a storage backend and a web-based visualization frontend. Client applications send streams of numeric time-series data to the Graphite backend (called carbon), where it gets stored in fixed-size database files similar in design to RRD. The web frontend provides 2 distinct user interfaces for visualizing this data in graphs as well as a simple URL-based API for direct graph generation.

Graphite consists of 3 software components:

- carbon - a Twisted daemon that listens for time-series data

- whisper - a simple database library for storing time-series data (similar in design to RRD)

- graphite webapp - A Django webapp that renders graphs on-demand using Cairo
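The URL-based API mentioned above is served at `/render`. It takes one or more `target` parameters plus options such as `from` and `format`. This minimal Python 3 sketch builds such URLs. `render_url` and the host `graphite.example.com` are hypothetical; check the render API docs for the full parameter list.

```python
from urllib.parse import urlencode


def render_url(base_url, targets, params=None):
    """Build a Graphite render API URL for one or more target metrics.

    params holds extra query parameters such as 'from', 'until', 'width',
    'height' or 'format'; they are passed through as-is (sorted by name).
    """
    query = [('target', target) for target in targets]
    query += sorted((params or {}).items())
    return '%s/render?%s' % (base_url.rstrip('/'), urlencode(query))


if __name__ == '__main__':
    print(render_url('http://graphite.example.com', ['foo.bar.baz'],
                     {'from': '-1h', 'format': 'png'}))
    # http://graphite.example.com/render?target=foo.bar.baz&format=png&from=-1h
```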

April 5, 2012

Python Book Giveaway - Boston Python User Group - April 12, 2012

I am bringing some of my [lightly read] Python books to give away at the next Boston Python User Group meetup.

Boston Python User Group - April Project Night

When: Thursday, April 12, 2012, 6:30 PM

Where: Microsoft NERD, Cambridge

Books:

- Pro Python (Alchin)

- Head First Python (Barry)

- Programming in Python 3 (Summerfield)

- Python Programming Patterns (Christopher)

- Hello World! (Sande)

If you are interested in getting a free book, come to #bostonpython Project Night on April 12!

March 24, 2012

Python Screencast: Install/Setup "SST Web Test Framework" on Ubuntu 12.04

I uploaded a 5 minute video/screencast showing how to install and setup SST Web Test Framework on Ubuntu (Precise Pangolin 12.04).

I step through: creating a virtualenv, installing SST from PyPI, and creating a basic automated web test:

http://www.youtube.com/watch?v=LpSvGmglZPI

The following steps are essentially a transcript of what I did...

install system package dependencies:

$ sudo apt-get install python-virtualenv xvfb

create a "virtualenv":

$ virtualenv ENV

activate the virtualenv:

$ cd ENV
$ source bin/activate
(ENV)$

* notice your prompt changed, signifying the virtualenv is active

install SST using `pip`:

(ENV)$ pip install sst

Now SST is installed. You can check the version of SST:

$ sst-run -V

March 8, 2012

Codeswarm - Python Core Development Visualization

particle visualization of Python core development commits: Jan 1, 2010 - Mar 06, 2012

http://www.youtube.com/watch?v=IQPuU_YtN8Q

Data source is the commit log from cpython mercurial trunk:

$ hg clone http://hg.python.org/cpython

... trimmed to show development activity since Jan 1, 2010.

(images making up this video were rendered with: Codeswarm)